In this month’s article we take a look at some of the technologies that are moving us in the direction of autonomous civilian vehicles and discover some surprises.

Background

Autonomous vehicle technology has been widely deployed in agriculture (tilling, fertilising, crop spraying, harvesting and picking), forestry, mining and large-scale earthmoving projects.

Spurred on by the US Defense Advanced Research Projects Agency (DARPA), research organisations and manufacturers invested millions of dollars and hundreds of thousands of man-hours in developing autonomous off-road and on-highway vehicle prototypes. DARPA’s goals in this initiative were to kick-start and refine the technologies necessary for autonomous vehicles to be successfully used in warfare.

DARPA’s 2004 Grand Challenge, 2005 Grand Challenge and 2007 Urban Challenge drove and showcased the rapid evolution of terrestrial vehicular autonomy. In 2004 not one entrant managed to navigate more than 12 km of the 240 km route. By the 2005 event, held over a different but equally challenging route, the technology had advanced to the point where five entrants finished the course. The 2007 Urban Challenge tasked autonomous vehicles with operating in compliance with the rules of the road in an urban environment with other merging traffic, traffic circles and intersections. In this last contest, three vehicles completed the course within the stipulated six-hour time limit and a further three finished after the official cut-off.

Real-world autonomous military vehicles now operate in war zones like Afghanistan, employing the technologies developed through the DARPA initiatives. Key elements that facilitate this autonomy are:

* Microcontrollers – for signal I/O and data processing.

* Vehicle buses – for intercommunication between the many on-board microcontrollers.

* Advanced sensor technology – including radar, lidar/ladar, cameras and distance/proximity sensors.

* Wireless – for Vehicle to Vehicle (V2V), Vehicle to Infrastructure (V2I)/Infrastructure to Vehicle (I2V) (aka Vehicle to Roadside – V2R) and Vehicle to Centre (V2C) communications.

* GPS – for spatial awareness.

Civilian vehicle platform

Over the past decade or so, many of the subsystems in the cars we drive have transitioned from mechanical designs to either electro-mechanical or fully electrical/electronic ones. Although these changes are often not apparent, they are pervasive. Take a close look at your accelerator pedal and you will probably find that it just operates a big variable resistor or LVDT (linear variable differential transformer) connected to one of the many ECUs (electronic control units) dotted about your car. The throttle butterfly is probably driven by a small electric motor or linear actuator controlled by the same ECU. And do not expect to find any mechanical linkages between the gear lever and gearbox on your automatic – it is all done by micro-switches and solenoids with a transmission ECU in between.
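
As a rough illustration of the drive-by-wire idea, the sketch below maps a pedal position reading onto a throttle opening. The function name, the 10-bit ADC range and the calibration values are hypothetical; a production ECU would do considerably more than this linear mapping.

```python
def pedal_to_throttle_deg(adc_counts: int,
                          adc_released: int = 100,
                          adc_floored: int = 900,
                          max_throttle_deg: float = 90.0) -> float:
    """Map a raw pedal sensor reading to a throttle butterfly angle.

    All calibration values are assumed for illustration only.
    """
    # Clamp the reading so noise outside the calibrated range is ignored.
    clamped = max(adc_released, min(adc_floored, adc_counts))
    # Fraction of pedal travel, from 0.0 (released) to 1.0 (floored).
    fraction = (clamped - adc_released) / (adc_floored - adc_released)
    return fraction * max_throttle_deg


for counts in (50, 100, 500, 900, 1023):
    print(counts, pedal_to_throttle_deg(counts))
```

With these assumed values a mid-travel reading of 500 counts requests a 45 degree opening, and readings outside the calibrated range are held at the end stops.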

Modern cars often have 15 or more of these ECUs communicating with each other and with diagnostic interfaces over an automotive communication bus such as CAN or FlexRay. The OPEN (One Pair EtherNet) Alliance is promoting Ethernet to replace these traditional automotive buses.

The presence of this integrated, networked control system architecture and growing processing power, along with the development of low cost on-chip solutions suited to volume manufacturing, has enabled rapid growth in vehicle intelligence.

What is driving autonomy?

Multiple motivators are driving the adoption of technologies leading toward fully autonomous vehicle operation. These motivators include calls for accident rate reduction, accident mortality rate reduction, accident injury reduction, reduction in pedestrian involvement in accidents, a need to reduce traffic densities and improve traffic flows, a need to reduce emissions and to reduce trip fuel consumption, and calls for multinational region-wide integrated vehicle identification for legal and commercial aspects such as e-tolling.

Convergence

Despite the very different nature of military and civilian vehicle uses, there has recently been significant convergence in the two fields of application.

Military goes civilian

Oshkosh TerraMax unmanned ground vehicle (UGV) technology, which supports both autonomous and semi-autonomous convoy operational modes, made its international début in February 2013 and is a state-of-the-art example of the application of autonomous vehicle technology. [Figure 1]

A key statement in Oshkosh’s February 11th press release announcing this début illustrates the close parallels between military technology and its application in civilian vehicles: “Oshkosh also is transitioning technologies from the TerraMax UGV system to provide active-safety features for the manned operation of vehicle fleets, including electronic stability control, forward collision warning, adaptive cruise control and electric power-assist steering.”

Civilian goes military

One of the ways that fuel consumption can be reduced (through reduced drag) under highway conditions is to operate vehicle convoys with smaller inter-vehicle spacing. The lead vehicle is driven by a driver and the remaining vehicles closely follow the leader or the immediately preceding convoy member semi-autonomously. The recently concluded multi-year European Union-funded SARTRE (Safe Road Trains for the Environment) project achieved a major milestone in May 2012, when a vehicle platoon (convoy) comprising two Volvo trucks and three Volvo passenger cars was successfully tested on a motorway outside Barcelona, Spain, over a 200 km route at speeds of up to 85 km/h with a 6 m gap between vehicles. [Figure 2]
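
To put that spacing in perspective, the short calculation below converts a 6 m gap at 85 km/h into a time headway. The function name is illustrative, and the two-second comparison is a common rule of thumb for human drivers, not a SARTRE figure.

```python
def time_gap_s(gap_m: float, speed_kmh: float) -> float:
    """Return the time headway in seconds for a given gap and speed."""
    if speed_kmh <= 0:
        raise ValueError("speed must be positive")
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return gap_m / speed_ms


platoon_headway = time_gap_s(6.0, 85.0)
two_second_gap_m = 2.0 * (85.0 / 3.6)  # distance covered in 2 s at 85 km/h

print(f"Platoon headway: {platoon_headway:.2f} s")           # 0.25 s
print(f"Two-second gap at 85 km/h: {two_second_gap_m:.0f} m")  # 47 m
```

A headway of about a quarter of a second is well below what a human driver could safely hold, which is why the following vehicles rely on automated control.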

ADAS

Advanced Driver Assistance Systems (ADAS) are systems designed to help drivers avoid or reduce the impact of accidents and avoid traffic violations. They may also assist in reducing fuel consumption through intelligent speed advice/speed adaptation and traffic condition awareness (eg, routing around traffic congestion).

Many of these systems can be seen as building blocks on the road to autonomous vehicle operation.

The functions of ADAS include, but are by no means limited to:

* Adaptive cruise control

* Collision warning

* Collision avoidance

* Lane departure warning

* Pedestrian detection

* Lane change assistance

* Driver drowsiness detection

* Traffic sign recognition

* Blind spot detection

* Adaptive light control.

In discussing some of the key ADAS functions we will touch on the underlying technology that enables them. In many instances the same hardware infrastructure serves to enable multiple functions. For instance, one or more forward facing cameras may be the primary sensor subsystem supporting adaptive cruise control, collision warning and lane departure warning.

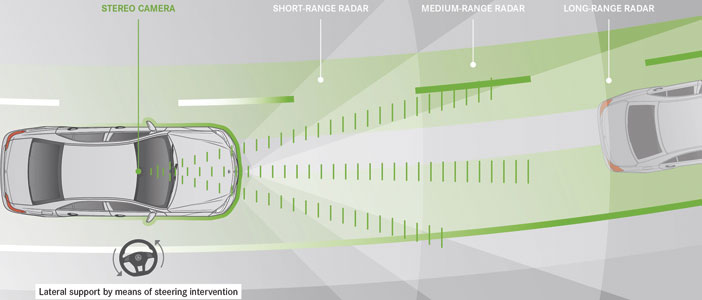

Adaptive cruise control (ACC)

ACC allows a vehicle to maintain a set speed (through active control of both accelerator and braking) where there is no preceding vehicle. It overrides the set speed to ensure a safe following distance or safe vehicle separation time if a preceding vehicle is present. Newer ACC systems can even be engaged in stop-start traffic conditions, making the driver’s task easier and eliminating low speed bumper bashings. The sensing technology for ACC may be one or more cameras, lidars or radars or a fusion of two technologies such as radar and camera sensors.
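
The behaviour described above can be sketched as a single decision made on every control cycle. The example below uses a simple constant time-gap rule with a proportional correction; this rule, the function name and all parameter values are assumptions for illustration and do not represent any manufacturer's algorithm.

```python
from typing import Optional


def acc_target_speed(own_speed_ms: float,
                     set_speed_ms: float,
                     lead_distance_m: Optional[float] = None,
                     lead_speed_ms: Optional[float] = None,
                     time_gap_s: float = 1.8,
                     standstill_gap_m: float = 5.0,
                     gain: float = 0.5) -> float:
    """Return the speed (m/s) the vehicle should aim for on this cycle."""
    # No preceding vehicle detected: hold the driver's set speed.
    if lead_distance_m is None or lead_speed_ms is None:
        return set_speed_ms

    # The desired gap grows with our own speed (constant time-gap policy).
    desired_gap_m = standstill_gap_m + time_gap_s * own_speed_ms
    gap_error_m = lead_distance_m - desired_gap_m

    # Match the lead vehicle's speed, corrected to close or open the gap.
    follow_speed_ms = lead_speed_ms + gain * gap_error_m

    # Never exceed the set speed and never command a negative speed.
    return max(0.0, min(set_speed_ms, follow_speed_ms))


print(acc_target_speed(30.0, 33.0))              # free road
print(acc_target_speed(30.0, 33.0, 30.0, 20.0))  # slower car close ahead
print(acc_target_speed(2.0, 33.0, 3.0, 0.0))     # stop-start traffic
```

Clamping the result at zero is what lets this sketch bring the vehicle to a halt behind a stationary lead vehicle, in the spirit of the stop-start capability mentioned above.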

Honda’s 2013 Accord uses a radar sensor for ACC; BMW’s Active Cruise Control with Stop & Go Function uses three forward looking radar sensors, while Daimler’s Distronic Plus utilises two short range, wide angle radars and one variable angle, long range radar. [Figure 3] Automotive radar systems typically operate in the 24 GHz or 77/79 GHz bands.

In November 2012, IHP Microelectronics announced that, through participation in the EU-funded SUCCESS (Silicon-based ultra-compact cost-efficient system design for mm-wave sensors) project, it had developed a complete radar system on a chip, which could be produced for approximately €1 (ZAR12). Significantly, this chip incorporates extensive self-testing – something not easily incorporated in high frequency chips – and all the HF circuitry is in the package, which means that application engineers only need to concern themselves with the LF interfaces.

Typically ACC system sensors cover forward ranges of 100 to 250 m.

Collision warning/prevention systems

The difference between collision warning and collision prevention is in the degree of autonomous action the vehicle systems take when an impending collision scenario is recognised. Warning systems alert the driver to the scenario (with flashing lights, GUI messages, buzzers and seat or steering wheel vibration) and rely on him/her to take the necessary actions to prevent a collision. Prevention systems act autonomously and may apply braking and/or directional control through steering movement or asymmetrical braking. At the same time various safety systems may be readied for immediate action: the brake hydraulic reservoirs and cylinders may be primed to minimise brake application delay once the driver treads on the stop pedal, and safety restraints may be pre-tensioned.
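
One common way to frame this distinction is in terms of time to collision (TTC): the range to the obstacle divided by the closing speed. The sketch below stages a response on TTC thresholds. The thresholds, names and two-stage structure are assumptions for illustration, not the logic of any system named in this article.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "warn driver"
    BRAKE = "autonomous braking"


def time_to_collision_s(range_m: float, closing_speed_ms: float) -> float:
    """Seconds until impact if nothing changes; infinite if not closing."""
    if closing_speed_ms <= 0:
        return float("inf")
    return range_m / closing_speed_ms


def select_action(range_m: float,
                  closing_speed_ms: float,
                  warn_ttc_s: float = 2.6,
                  brake_ttc_s: float = 1.2,
                  prevention_enabled: bool = True) -> Action:
    """Choose a response; a warning-only system never brakes by itself."""
    ttc = time_to_collision_s(range_m, closing_speed_ms)
    if prevention_enabled and ttc <= brake_ttc_s:
        return Action.BRAKE
    if ttc <= warn_ttc_s:
        return Action.WARN
    return Action.NONE


print(select_action(50.0, 10.0))  # TTC 5 s
print(select_action(20.0, 10.0))  # TTC 2 s
print(select_action(10.0, 10.0))  # TTC 1 s
print(select_action(10.0, 10.0, prevention_enabled=False))
```

Setting `prevention_enabled=False` models a warning-only system: even at a very short TTC it can only alert the driver.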

The sensors used for these functions may be one or more cameras, lidars, radars or some fusion of these – and for very short distances ultrasonic park sensors may come into play.

The 2013 Honda Accord uses a forward facing camera mounted between the rearview mirror and windscreen to detect potential forward collisions. The BMW Adaptive Brake Assist uses the same radar sensors used by their Active Cruise Control, while Daimler’s PRE-SAFE Brake and BAS PLUS Brake Assist work together using the same three radar sensors used by Distronic Plus to initially warn and then actively prevent forward collisions.

Lane departure warning

The goal of lane departure warning (LDW) systems is to alert the driver if he/she starts to change lane unintentionally. Intent is judged from the use of the appropriate turn indicator or from analysis of the driver’s steering wheel activity; a lane change made without such a signal is presumed to be unintentional and caused by driver inattention or drowsiness.

Lane departure warning systems are based on forward facing cameras which monitor lane markings and can therefore detect if the host vehicle strays out of its lane.

The 2013 Honda Accord uses its forward facing camera as the primary sensor and an LDW dashboard indicator and buzzer to alert the driver. BMW’s LDW system uses a forward facing camera and alerts the driver through steering wheel vibration. Daimler’s Lane Keeping Assist utilises a camera mounted between the rearview mirror and windscreen to monitor lane markings. When an unintentional lane departure is detected it vibrates the steering wheel and if no corrective driver action takes place it applies brakes on one side of the vehicle to attempt to bring it back into its lane.
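
The warn-then-intervene pattern described above can be sketched as follows. The camera is assumed to report the distance from the vehicle to the nearest lane marking; the function name, the margin and the escalation delay are hypothetical values chosen for illustration.

```python
def ldw_action(dist_to_marking_m: float,
               indicator_on: bool,
               driver_correcting: bool = False,
               time_since_warning_s: float = 0.0,
               margin_m: float = 0.2,
               escalate_after_s: float = 1.0,
               can_intervene: bool = False) -> str:
    """Return 'none', 'warn' or 'brake_one_side' for this control cycle."""
    # A signalled lane change is treated as intentional.
    if indicator_on:
        return "none"
    # Still comfortably inside the lane: nothing to do.
    if dist_to_marking_m >= margin_m:
        return "none"
    # Drifting, warning already given and ignored: intervene if equipped.
    if (can_intervene and not driver_correcting
            and time_since_warning_s >= escalate_after_s):
        return "brake_one_side"
    # Otherwise alert the driver (buzzer, indicator lamp or vibration).
    return "warn"


print(ldw_action(0.5, indicator_on=False))   # none
print(ldw_action(0.1, indicator_on=True))    # none
print(ldw_action(0.1, indicator_on=False))   # warn
print(ldw_action(0.1, indicator_on=False,
                 time_since_warning_s=1.5,
                 can_intervene=True))        # brake_one_side
```

A warning-only system corresponds to leaving `can_intervene` at its default, in which case the function never goes beyond alerting the driver.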

Similar technology and algorithms are used for lane centring on fully autonomous vehicles.

Pedestrian detection

Pedestrian, cyclist and even stray animal detection systems are gradually being introduced into passenger vehicles. At the time of writing these systems are primarily the domain of high-end vehicles and fully autonomous military, agricultural, mining and industrial vehicles, but you can expect to see their rapid proliferation in the EU in the next year or so.

The underlying sensor technology for pedestrian detection varies between manufacturers. Volvo’s latest active cyclist and pedestrian protection system announced in March 2013 uses a fusion of radar and high-resolution camera technology coupled to active vehicle braking. Daimler’s passive Night View Assist uses an infrared camera in conjunction with two infrared headlamps to detect both warm items (pedestrians, cyclists and animals) and cold items (like rocks or dropped cargo) in the vehicle’s path at distances of more than 150 m.

Image processing

The real-time processing of sensor data from lidar, radar, infrared cameras and visible light cameras is processor-hungry work – especially when it involves the fusion of data from multiple sensors or sensor technologies. And who do we find there but organisations like Analog Devices, Toshiba and NVIDIA?

For 2012, European NCAP (New Car Assessment Program) raised the bar for pedestrian protection and according to Analog Devices, “[M]any vehicles rated 5 star by NCAP just last year would lose one of the stars if re-rated using the new 2012 Euro NCAP metrics.”

A February 2013 news release from Toshiba predicts that initiatives like the European NCAP requirement for the incorporation of active braking systems in 2014 will drive a 50 to 100% expansion in the global market for image recognition processors between fiscal years 2010 and 2015.

NVIDIA’s Tegra processor technology has already been incorporated in the Tesla Motors Model S electric sedan where the NVIDIA Tegra Visual Computing Module (VCM) powers the vehicle’s 17-inch touch screen infotainment and navigation system and its all-digital instrument cluster. In January 2013 the company announced that Audi AG has selected the NVIDIA Tegra 3 mobile processor to power in-vehicle infotainment systems and new digital instrument clusters that will replace traditional dashboard gauges across its full line-up of vehicles worldwide starting this year.

A way to go

In South Africa, of course, we have a way to go. Functions like active lane centring require well-marked roads, but the spin-off from pedestrian detection systems and the like will surely benefit all road and roadside users.

Resources

DARPA: Home page, www.darpa.mil

Analog Devices: Home page, www.analog.com

IHP Microelectronics: Home page, www.ihp-microelectronics.com

Oshkosh Defense: Home page, www.oshkoshdefense.com

Toshiba: Home page, http://www.toshiba.co.jp

© Technews Publishing (Pty) Ltd | All Rights Reserved