Data acquisition networks face two emerging challenges: guaranteeing data acquisition across the Internet, and making automation systems interoperate with the cloud. The public Internet is less stable and far less secure than a circumscribed LAN, so working ‘in the cloud’ has forced a reconsideration of communication parameters such as timeout margins, connection checks and packet validation, as the guarantees behind robust and secure data acquisition are re-engineered for public networks. Interoperability presents a related challenge: system integrators are commonly called upon to custom-code sockets for a wide variety of client hardware, which costs clients time and money in installation and troubleshooting. For all these reasons, thoroughly tested, standardised server software and data processing clients can introduce important simplifications that give tangible benefits in deployment, network efficiency and connection reliability.

Push-based data acquisition for robust and efficient networks

The push-based data acquisition model is a network design approach that reduces data congestion, giving better connection reliability and higher throughput. Push technology shifts the responsibility for data updates from the OPC server to the individual remote I/O and RTU controllers. Instead of the OPC server steadily sending polling requests to each remote device (and occupying precious bandwidth), the remote I/O and RTU controllers themselves poll their attached I/O modules and send reports back to the OPC server only as required.
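The bandwidth argument can be made concrete with some back-of-envelope arithmetic. The Python sketch below is illustrative only: the function names, the assumption that each poll costs one request plus one response, and all the figures in the example are hypothetical and are not taken from any product specification.

```python
def polling_messages_per_hour(devices: int, poll_interval_s: float) -> float:
    """Server-driven polling: one request plus one response per device per poll."""
    polls_per_hour = 3600 / poll_interval_s
    return devices * polls_per_hour * 2


def push_messages_per_hour(devices: int, changes_per_hour: float,
                           report_interval_s: float) -> float:
    """Device-driven push: one report per status change plus one per interval."""
    interval_reports_per_hour = 3600 / report_interval_s
    return devices * (changes_per_hour + interval_reports_per_hour)


if __name__ == "__main__":
    # Hypothetical figures chosen only to illustrate the arithmetic.
    polled = polling_messages_per_hour(devices=100, poll_interval_s=1)
    pushed = push_messages_per_hour(devices=100, changes_per_hour=20,
                                    report_interval_s=60)
    print(f"polling: {polled:.0f} messages/hour")
    print(f"push:    {pushed:.0f} messages/hour")
```

Under these assumed figures the polled network carries far more messages than the push-based one. The real ratio depends entirely on how often I/O states actually change and on the reporting interval chosen.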

With effectively engineered push technology, I/O status gets updated when one of three events occurs:

* An I/O status change is reported by a sensor.

* A pre-configured interval is reached.

* A request is issued by a user.

This shift in software architecture cuts the polling traffic crossing the network, freeing up bandwidth for faster I/O response times and fewer dropped packets. Because I/O devices send information only when required, alarms and data updates are expedited and overall system response time is reduced.
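The three update triggers listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Moxa's implementation: the `PushReporter` class, its method names and the `send` callback are all hypothetical, and time is passed in explicitly to keep the logic easy to follow.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PushReporter:
    """Decides when a remote device pushes an I/O report (hypothetical class)."""
    send: Callable[[str, int, str], None]  # (channel, value, reason) -> None
    interval_s: float                      # pre-configured reporting interval
    last_value: Optional[int] = None
    last_sent_at: Optional[float] = None

    def _push(self, channel: str, value: int, reason: str, now: float) -> None:
        self.send(channel, value, reason)
        self.last_value = value
        self.last_sent_at = now

    def sample(self, channel: str, value: int, now: float) -> bool:
        """Called on every local scan; pushes only on change or interval expiry."""
        if self.last_value is None or value != self.last_value:
            self._push(channel, value, "change", now)
            return True
        if now - self.last_sent_at >= self.interval_s:
            self._push(channel, value, "interval", now)
            return True
        return False  # nothing new: stay silent and save bandwidth

    def request(self, channel: str, value: int, now: float) -> None:
        """A user-issued request always produces a report."""
        self._push(channel, value, "request", now)
```

The device still scans its own I/O continuously, but only the three events named in the text cause anything to cross the network.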

Streamlining database functions

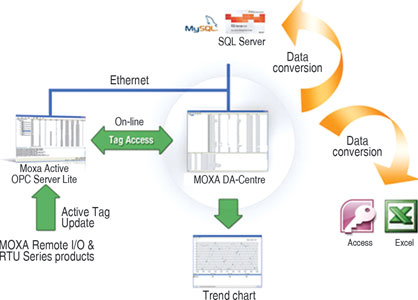

Automated database functions are another way to achieve more reliable connectivity. During daily operations, most remote data acquisition systems require periodic human intervention to manage remote data collection and/or database uploads, and software is often needed to handle conversion and logging. Automating this work makes it simpler and quicker and, if the automation is properly engineered to support day-to-day system administration and data analysis, more robust as well.

A database gateway functions as a bridge between delivered data and the relational databases into which that data is converted and collated. To be effective, a gateway should be a simple-to-configure client that performs preliminary processing and tagging and, when needed, automatically retrieves and processes I/O data for storage either in the central database or locally for on-demand analysis. Database gateways can thus further reduce deployment effort while making critical I/O data more immediately available to administrators and users.
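As a rough illustration of the gateway idea, the Python sketch below tags each channel in an incoming report and writes it to a relational table using the standard `sqlite3` module. The table layout, the report format and the function names are assumptions made for this example; they do not describe DA-Center or any other product.

```python
import sqlite3
from datetime import datetime, timezone


def open_gateway(path: str = ":memory:") -> sqlite3.Connection:
    """Open the target database and make sure the readings table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS io_readings ("
        " device TEXT NOT NULL, tag TEXT NOT NULL,"
        " value REAL NOT NULL, received_at TEXT NOT NULL)"
    )
    return conn


def store_report(conn: sqlite3.Connection, report: dict) -> int:
    """Tag each channel in a pushed report and insert it as one row."""
    received_at = datetime.now(timezone.utc).isoformat()
    rows = [
        (report["device"], f'{report["device"]}.{channel}', float(value), received_at)
        for channel, value in report["channels"].items()
    ]
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO io_readings VALUES (?, ?, ?, ?)", rows)
    return len(rows)


if __name__ == "__main__":
    conn = open_gateway()
    store_report(conn, {"device": "rtu-01", "channels": {"AI0": 41.5, "DI0": 1}})
    for row in conn.execute("SELECT tag, value FROM io_readings ORDER BY tag"):
        print(row)
```

Once reports land in a table like this, administrators can query them with ordinary SQL and no manual conversion step.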

Saving data from spotty networks

Finally, because Internet failures are still relatively commonplace, a robust mechanism for offline data collection is an important mitigating feature for cloud-based data acquisition networks. The most obvious and straightforward approach is for the remote I/O module or RTU to keep collecting data and store it for re-transmission when the network comes back online. Oddly, few automation software packages currently offer this functionality, yet the feature is quite simple to implement on push-based systems and is central to Moxa’s Active OPC Server and DA-Center software package.
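The store-and-forward behaviour described here can be sketched as follows. This is a simplified illustration, not the mechanism used by any particular product: the `StoreAndForward` class and its `transmit` callback are hypothetical, and the backlog is held in memory, whereas a real device would normally write it to persistent storage.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffers records while the link is down and replays them in order."""

    def __init__(self, transmit: Callable[[dict], bool], capacity: int = 1000):
        # transmit() returns True if the record was delivered, False otherwise.
        self.transmit = transmit
        # Bounded backlog: when full, the oldest record is dropped first.
        self.backlog: Deque[dict] = deque(maxlen=capacity)

    def flush(self) -> int:
        """Send buffered records oldest-first; stop at the first failure."""
        sent = 0
        while self.backlog:
            if not self.transmit(self.backlog[0]):
                break
            self.backlog.popleft()
            sent += 1
        return sent

    def report(self, record: dict) -> bool:
        """Queue the record, then try to drain the backlog in order."""
        self.backlog.append(record)
        self.flush()
        return not self.backlog  # True when nothing is left waiting
```

Because every new record goes through the same queue, data collected during an outage is delivered in its original order as soon as a transmission succeeds again.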

A real-world example

Here is how an actual implementation might work with all three techniques employed. A large solar power grid with hundreds of solar panels involves a large number of I/O points, so traditional custom-coded polling techniques carry high deployment and maintenance costs. Instead, smart I/O modules and RTU controllers like the ioLogik W5300 can use push-based data acquisition to report solar panel temperature and status over the network. Coupled with Moxa’s bundled Active OPC Server, users can access the status of all solar panels in the field in a network-efficient manner, while built-in offline storage fail-safes ensure that up to 32 Gb of data can be retained should network connectivity be lost. Further, DA-Center software (also free) can be installed alongside Active OPC Server to automate the conversion, analysis and storage of this data for fast availability.

For more information contact RJ Connect, +27 11 781 0777, www.rjconnect.co.za

© Technews Publishing (Pty) Ltd | All Rights Reserved