During my days as a process engineer, I worked on an amino acid fermentation plant where it was critical to keep the process sterile at all times; otherwise, entire batches could be lost to contamination. Dozens of automated diaphragm valves were used to control the feed of raw materials into the fermenters, as well as the CIP (clean-in-place) solutions and steam used to sterilise the equipment. The valve diaphragms were made of a special EPDM-PTFE compound with a limited lifetime. Predicting when to replace them was important to stop pinhole leaks from developing, since these could contaminate the system.

At first, the maintenance team replaced the diaphragms on a fixed schedule. But we knew that the useful life of a diaphragm depended on the number of valve cycles (open/close) and on the total time it was exposed to high temperatures (steam). This data was readily available in the DCS. It was not long before we were analysing the historian data to predict the remaining life of the diaphragms. This promised to be a worthwhile exercise: the diaphragms were expensive, and we fully expected to reduce the number of changes without increasing the risk of contamination. As a result of this work, the maintenance schedules were adjusted to reflect actual usage. In a small way, we were using the principles of predictive analytics to optimise planned maintenance schedules.
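A usage-based estimate of this kind can be sketched in a few lines. This is a minimal sketch, not the method used on the plant: it assumes (hypothetically) that wear from valve cycling and wear from steam exposure each consume a fraction of a rated limit and simply add together. The limits `RATED_CYCLES` and `RATED_STEAM_HOURS`, the valve tags and the 80% margin are illustrative placeholders.

```python
from dataclasses import dataclass

# Hypothetical rated limits; real values would come from the diaphragm
# supplier or from the plant's own replacement and failure records.
RATED_CYCLES = 20_000
RATED_STEAM_HOURS = 1_500.0


@dataclass
class ValveUsage:
    tag: str
    cycles: int         # open/close cycles since the last diaphragm change (e.g. from the DCS historian)
    steam_hours: float  # hours of steam exposure since the last change


def life_consumed(u: ValveUsage) -> float:
    """Fraction of diaphragm life used, assuming cycling wear and steam wear add linearly."""
    return u.cycles / RATED_CYCLES + u.steam_hours / RATED_STEAM_HOURS


def due_for_replacement(u: ValveUsage, margin: float = 0.8) -> bool:
    """Flag a valve once its consumed life reaches a safety margin below 100%."""
    return life_consumed(u) >= margin


valves = [
    ValveUsage("XV-101", cycles=8_000, steam_hours=300.0),
    ValveUsage("XV-102", cycles=15_000, steam_hours=450.0),
]
for v in valves:
    print(f"{v.tag}: {life_consumed(v):.0%} of life used, replace={due_for_replacement(v)}")
```

The linear, additive wear assumption is the simplest starting point; historian data on actual diaphragm failures would be needed to check it or replace it with a better-fitting relationship.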

Breakdowns and equipment failures can be costly across all industries. In transportation, for example, the premature failure of a jet engine can cause cancelled flights and delays, as well as being a safety concern. In process industries, a critical compressor failure can result in the shutdown of an entire plant. In power generation, large high-speed turbines can take months or even years to replace, resulting in lost capacity and revenue.

Predictive analytics for planned maintenance is a technique that uses data to understand equipment degradation and predict failure. With a better understanding of the risk of failure, preventative maintenance can be scheduled at the best possible time, reducing disruption and therefore cost.

In the past few years, the use of predictive analytics has grown. This trend has been underpinned by three main technology drivers:

1. Better field instrumentation that includes smart sensors connected to the Internet.

2. Cloud-based IoT platforms capable of streaming and analysing large volumes of data through the web.

3. Better connectivity and the ease with which the operating parameters of equipment can be remotely monitored by service providers.

Using predictive analytics to plan maintenance makes the most sense in asset-intensive operations such as transport, construction, chemicals, utilities and mining. The techniques are equally applicable to continuous processes and to discrete/batch manufacturing.

Predictive analytics has the most impact on the bottom line when used to predict failure of high-value or critical components, where the impact of any downtime is significant. Examples include the jet engines of an aircraft, the turbine generators in a power station, and key processing equipment such as pumps or compressors in a chemical plant.

Predictive analytics can be applied at many levels: at the component level (e.g. turbine shaft vibration), at the equipment level (e.g. turbine output) or at the system level (e.g. generation plant efficiency). Deciding where to apply the techniques requires an evaluation of the individual systems, equipment or components, together with an assessment of the available data sources. It is also important to assess how likely it is that a suitable artificial intelligence (AI) or machine learning model will be able to model the system accurately and yield the desired results.

What is predictive analytics?

Predictive analytics is not magic. It uses established mathematical techniques to process data in a smarter way. In maintenance, it can manifest itself in a number of forms, one of which is called ‘condition monitoring’.

By understanding equipment condition and degradation patterns, it is possible to estimate the risk of failure, i.e. where, when and why a piece of equipment is likely to fail. When there is sufficient confidence in the results of the analysis, maintenance can be rescheduled or deferred. Ultimately, the goal is to make better maintenance decisions based on data.

The statistical techniques used include:

• Trend analysis and regression – projecting future performance based on past trends in the data.

• Pattern recognition – looking for patterns in the data that indicate abnormal operating conditions that could lead to imminent failure.

• Performance limits – triggering an alert when some parameter moves out of normal performance boundaries (anomaly detection).

• Prioritising – evaluating multiple options to prioritise maintenance work.

• Binary classification – establishing whether or not something needs maintenance based on a complex set of input variables.

• Multiclass classification – predicting the probability of failures and failure classes over time.
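To make the first and third techniques concrete, here is a minimal sketch in plain Python. It assumes hypothetical daily bearing-temperature readings and an illustrative upper limit of 75 °C: it fits a least-squares trend, projects when that trend will reach the limit (trend analysis and regression), and separately checks whether the latest reading is outside a normal operating band (performance limits). The function names and all numbers are illustrative only.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = y_mean - b * x_mean
    return a, b


def days_until_limit(xs, ys, limit):
    """Trend analysis: project the fitted line forward to the day it reaches `limit`.

    Returns None if the trend is flat or falling (the limit is never reached).
    """
    a, b = fit_line(xs, ys)
    if b <= 0:
        return None
    crossing = (limit - a) / b
    return max(0.0, crossing - xs[-1])


def outside_limits(value, low, high):
    """Performance limits: True when a reading leaves its normal operating band."""
    return not (low <= value <= high)


days = [0, 1, 2, 3, 4, 5]
temps = [60.0, 61.0, 62.0, 63.0, 64.0, 65.0]   # hypothetical bearing temperatures, deg C

print(days_until_limit(days, temps, limit=75.0))  # 10.0
print(outside_limits(temps[-1], 40.0, 75.0))      # False
```

The two checks complement each other: the limit check only fires once a problem has arrived, whereas the trend projection gives advance warning that can be used to schedule maintenance.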

Where predictive analytics makes the most sense

Predictive analytics is not necessarily a technique that should be applied to every item of equipment. The areas where the technique is most likely to yield good results include:

1. Moving machinery (where it is relatively easy to measure vibration, operating conditions etc.).

2. High capital cost items.

3. Critical equipment in a process where there is a high opportunity cost of breakdown.

4. Equipment for which the vendor has already built a good machine learning model and can provide a packaged solution.

5. Maintenance of specialised equipment under service contract (outsourced).

6. Failures where the root cause variables are well understood.

The benefits of predictive analytics in maintenance

There are very real business benefits to a predictive analytics system. These include:

1. Improved product quality and service levels.

2. Improved availability of equipment.

3. Early detection of expensive failures.

4. Better planning of the replenishment of assets.

5. Reduction of spare parts inventory.

6. Improved data to support warranty claims.

7. Increased life of assets and reduced use of consumables.

8. Reduced maintenance and downtime costs with better forecasting and budgeting.

How does predictive analytics actually work?

Predictive analytics is used by data scientists to predict future events with statistical techniques. The common approach is to build a model from a number of input data streams that are related to degradation or failure. After sufficient training, the model has ‘learned’ the behaviour of the system and can, in principle, be used to make predictions.

Training requires real-world data. For example, if you are going to predict breakdowns, the model should be trained with data that includes some actual breakdown events; the more data that can be provided to train the model, the better. In certain high-impact scenarios (e.g. aircraft engine manufacture) it is possible to run experiments at sufficient scale, involving actual engine failures, to train the model. In other scenarios (e.g. predicting the failure of a nuclear reactor) such experiments would, for obvious reasons, be impractical, and other training approaches would be needed.
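One common way to turn breakdown records into training data is to label each historical reading according to whether a failure followed it within a chosen warning horizon. The sketch below assumes readings and failures are recorded in operating hours; the function name, the sample data and the 100-hour horizon are hypothetical.

```python
from bisect import bisect_left


def label_readings(reading_hours, failure_hours, horizon):
    """Label each reading 1 if a recorded failure occurs within `horizon` hours after it, else 0.

    reading_hours and failure_hours are operating-hour timestamps;
    failure_hours must be sorted in ascending order.
    """
    labels = []
    for t in reading_hours:
        i = bisect_left(failure_hours, t)  # index of the first failure at or after this reading
        next_failure = failure_hours[i] if i < len(failure_hours) else None
        labels.append(1 if next_failure is not None and next_failure - t <= horizon else 0)
    return labels


readings = [0, 100, 200, 300, 400, 500]
failures = [350]   # hypothetical: one recorded breakdown at 350 operating hours
print(label_readings(readings, failures, horizon=100))  # [0, 0, 0, 1, 0, 0]
```

With only one failure, just one reading is labelled positive, which illustrates why the model needs data covering as many real breakdown events as possible. In practice, readings taken after a repair would also be treated as the start of a new component life.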

The process typically involves preparing the input data (clean-up and transformation), building the model, and then interpreting and visualising the output in a form that makes sense to us humans.
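Those stages can be illustrated with a deliberately small sketch. It assumes hypothetical vibration readings with some missing and invalid values, smooths them with a moving average, and fits a one-feature decision stump, which is about the simplest possible binary classifier, to hand-assigned "needs maintenance" labels. None of the names or numbers come from a real system.

```python
def clean(values):
    """Clean-up: drop missing readings (None) and physically impossible negative values."""
    return [v for v in values if v is not None and v >= 0]


def rolling_mean(values, window):
    """Transformation: smooth the series with a trailing moving average."""
    return [sum(values[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(values))]


def fit_threshold(features, labels):
    """Modelling: a one-feature decision stump that predicts 1 ('needs maintenance')
    when feature >= threshold, choosing the threshold with the best training accuracy."""
    best_t, best_acc = None, -1.0
    for t in sorted(set(features)):
        preds = [1 if f >= t else 0 for f in features]
        acc = sum(p == y for p, y in zip(preds, labels)) / len(labels)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t, best_acc


# Hypothetical vibration readings (mm/s) and maintenance labels for each smoothed point.
raw = [2.1, None, 2.3, 2.2, 3.9, 4.4, -1.0, 4.8]
features = rolling_mean(clean(raw), window=2)
labels = [0, 0, 1, 1, 1]
threshold, accuracy = fit_threshold(features, labels)

# Interpretation: express the result as a rule an operator can act on.
print(f"Flag for maintenance when smoothed vibration >= {threshold:.2f} mm/s "
      f"(training accuracy {accuracy:.0%})")
```

Accuracy measured on the training data is optimistic. A real model would be checked against data it has not seen before anyone relies on its output.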

Rarely is this data capture process automated. Some human analysis is needed at the outset to decide what input variables to measure, what modelling technique will be required and how to format the output. In many maintenance situations the only training data available will be stored in legacy systems, probably not in the right format; for example, it might be necessary to work manually through work order data from the maintenance system. This can take many hours before the system is sufficiently understood and enough data has been collected to train the models.

Examples of historical data that might be useful inputs when developing a model:

• Failure history.

• Repair history.

• Condition of the machine.

• Operating conditions/context.

• Machine specifications.

• Operator parameters.

When building a system, it is very important at the outset to understand exactly what is going to be asked of the model. Each breakdown scenario needs to be defined exactly. This means that you have to have a very clear (‘sharp’) question in mind. For example: “At 90% confidence level, how many more hours can we run this motor before the bearings are no longer within specification?” Not, “Show me where things might go wrong.”
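One simple way to make the example question computable is to interpret "90%" as the life that 90% of comparable bearings exceeded (a B10-style life) and subtract the hours this motor has already run. This is an assumed interpretation: it gives an empirical reliability figure, not a formal statistical confidence bound, which would require a fitted life distribution. The historical lives below are made up.

```python
import statistics


def hours_remaining(historical_lives, hours_run, reliability=0.9):
    """Hours left before this unit reaches the life that `reliability` of past units exceeded.

    Uses an empirical percentile of historical run-to-out-of-spec lives; with
    reliability=0.9 this is the 10th-percentile ('B10') life. Returns 0.0 if the
    unit has already run past that point.
    """
    pct = round((1 - reliability) * 100)               # e.g. 10 for 90% reliability
    cut_points = statistics.quantiles(historical_lives, n=100)
    return max(0.0, cut_points[pct - 1] - hours_run)   # cut_points[k-1] is the k-th percentile


# Hypothetical operating hours at which similar motor bearings went out of specification.
lives = [9000, 9500, 10000, 10500, 11000, 11500, 12000, 12500, 13000, 13500]
print(hours_remaining(lives, hours_run=6000))  # 3050.0
```

Even this crude version forces the precise definitions the sharp question demands: what "out of specification" means, which motors count as comparable, and what level of risk is acceptable.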

The data being measured also needs to be reliable, accurate, complete and related to the specific failure. It is no good measuring vibration when a better leading indicator might be running temperature, for example. Your measurements should also be at the right level (i.e. at component level or at system level). It is unlikely (but not impossible) that you will get meaningful data at system level that will be able to accurately predict individual component failures.

When developing the model, you also need to know exactly how far in advance you need to make predictions. The further ahead, the more uncertain the prediction will be. It might make little sense to use analytics to predict failure a year in advance when routine inspections are done monthly.
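The growth of uncertainty with horizon can be seen even in the simplest trend model. For a straight line fitted by ordinary least squares, the standard error of a new prediction at $x_0$ is $s\sqrt{1 + 1/n + (x_0 - \bar{x})^2 / S_{xx}}$, so it grows the further $x_0$ lies from the data. The sketch below applies this to hypothetical daily wear measurements. It assumes a linear trend; real degradation is often non-linear, which widens the uncertainty further.

```python
import math


def prediction_std_error(xs, ys, x_new):
    """Standard error of a new observation predicted by an OLS line at x_new.

    se = s * sqrt(1 + 1/n + (x_new - x_mean)^2 / Sxx), where s is the residual
    standard deviation of the fit (n - 2 degrees of freedom).
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    b = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sxx
    a = y_mean - b * x_mean
    sse = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    s = math.sqrt(sse / (n - 2))
    return s * math.sqrt(1 + 1 / n + (x_new - x_mean) ** 2 / sxx)


days = list(range(10))
wear = [1.0, 1.3, 1.9, 2.1, 2.8, 3.0, 3.4, 4.1, 4.3, 4.9]  # hypothetical wear measurements

for horizon in (1, 30, 365):  # days ahead of the last measurement
    se = prediction_std_error(days, wear, days[-1] + horizon)
    print(f"{horizon:>3} days ahead: standard error {se:.3f}")
```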

Near real-time predictive analytics is only feasible when sensors are connected; manual data capture delays the forecasting process and can introduce errors. Fortunately, with smart sensors and good IoT-enabled infrastructure, it is now easier than ever to connect sensors to artificial intelligence models.

In many cases there is no need for online ‘real-time’ analytics. The processing costs of continuous analysis need to be weighed against the impact of, for example, running the models only once a day.
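A daily batch approach can be as simple as collapsing the raw readings into per-day summaries and scoring each day once. In the minimal sketch below, `score_day` is a hypothetical stand-in for a trained model (a plain limit check), and the readings and 75 °C limit are illustrative.

```python
from collections import defaultdict
from datetime import datetime


def daily_summary(readings):
    """Collapse (timestamp, value) readings into a per-day mean and maximum."""
    by_day = defaultdict(list)
    for ts, value in readings:
        by_day[ts.date()].append(value)
    return {day: {"mean": sum(vals) / len(vals), "max": max(vals)}
            for day, vals in sorted(by_day.items())}


def score_day(summary, max_limit=75.0):
    """Hypothetical stand-in for a trained model: flag the day if the peak exceeded a limit."""
    return summary["max"] > max_limit


readings = [
    (datetime(2024, 5, 1, 8, 0), 70.0),
    (datetime(2024, 5, 1, 20, 0), 72.0),
    (datetime(2024, 5, 2, 9, 0), 78.0),
]
for day, summary in daily_summary(readings).items():
    print(day, summary, "ALERT" if score_day(summary) else "ok")
```

The trade-off is latency: a problem that develops in the morning is only flagged at the next scheduled run, which is acceptable when failures develop over days or weeks.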

Cloud platforms that support predictive analytics models

Advanced analytics systems can be embedded in the equipment package, or can be run on local servers, or on cloud servers.

Cloud-based analytics processing is best suited to data sources that stream data from multiple locations to the web, such as when an equipment supplier remotely monitors its own specialised equipment under a maintenance contract. Cloud-based systems are also useful where the required processing power (servers) would make it uneconomical to build the infrastructure in-house, and where economies of scale in large data centres can be leveraged.

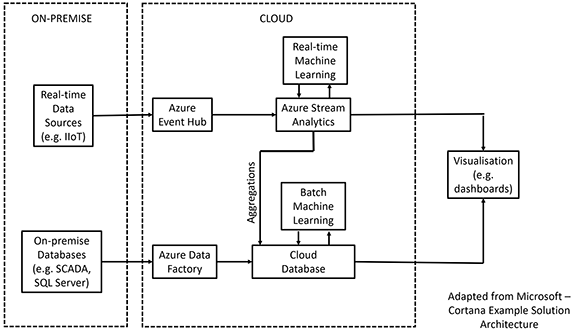

An example of a cloud-based predictive analytics platform is Microsoft Azure, incorporating Azure Data Factory, Azure Event Hubs, Azure Machine Learning, Azure Stream Analytics and Power BI. Solutions from other enterprise software providers can also be considered, such as IBM’s Predictive Analytics, Oracle’s Asset Performance Management, Infor’s Enterprise Asset Management solution and SAP Predictive Maintenance and Service. Several providers of process control equipment also offer predictive analytics solutions that can run on-premises or in the cloud.

Figure 1 shows an example of a cloud predictive maintenance architecture based on Microsoft Azure and Cortana Intelligence Solution. On-premises data is processed in real time through the event hub and Stream Analytics. It is then combined with batch data from on-premises databases and stored in a cloud database for machine learning and further analysis. A single visualisation tool is used to aggregate and display the results of the analysis.
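The idea of combining a real-time stream with batch data can be sketched independently of any vendor. The plain-Python sketch below is a conceptual illustration, not Azure code and not Stream Analytics query syntax. It averages streaming readings per asset over fixed, non-overlapping windows (similar in spirit to a tumbling window) and then joins each window with batch records, which here are a hypothetical export from an on-premises maintenance database. All asset IDs, field names and values are made up.

```python
from collections import defaultdict


def tumbling_window_avg(events, window_s):
    """Real-time path: average each asset's readings over fixed, non-overlapping windows.

    events: iterable of (asset_id, epoch_seconds, value).
    Returns {(asset_id, window_start_seconds): average_value}.
    """
    sums = defaultdict(lambda: [0.0, 0])
    for asset, t, value in events:
        key = (asset, t - t % window_s)   # start of the window this event falls into
        sums[key][0] += value
        sums[key][1] += 1
    return {k: total / count for k, (total, count) in sums.items()}


def join_with_batch(window_avgs, batch_records):
    """Combine streaming aggregates with batch data, one row per asset and window."""
    rows = []
    for (asset, start), avg in sorted(window_avgs.items()):
        rows.append({"asset": asset, "window_start": start, "avg": avg,
                     **batch_records.get(asset, {})})
    return rows


events = [("P-01", 0, 2.0), ("P-01", 30, 4.0), ("P-01", 70, 5.0), ("P-02", 10, 1.0)]
batch = {"P-01": {"hours_since_overhaul": 4200}, "P-02": {"hours_since_overhaul": 900}}

for row in join_with_batch(tumbling_window_avg(events, window_s=60), batch):
    print(row)
```

The combined rows are what a machine learning model or a visualisation tool would consume: recent condition from the stream alongside context, such as maintenance history, that changes too slowly to need streaming.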

A few practical considerations

Predictive analytics is not going to be suitable for all situations. Your company needs to have mature processes that can use the output of the models in a way that fully takes advantage of the new information. There also needs to be sufficient skill within the business to develop, maintain and support the systems.

Models need to be trusted by those responsible for operational decision-making. If the output of a predictive analytics model does not make sense, managers may ignore the system and rely on tried and trusted techniques learned from their own experience. Unreliable models can worsen the situation and be very disruptive to production.

A predictive analytics project can be a data scientist’s dream; however, it is important at the outset to quantify the business benefits. Ultimately, any such project needs to be business-driven and not technology-driven.

Conclusion

Predictive analytics in maintenance is becoming mainstream as a result of enabling technologies. There are many benefits to understanding exactly when something is likely to fail, leading to more effective maintenance, reduced costs and improved production/service levels. In order to get the business benefits of such a system it is important to understand the limitations of the technology and where it should be applied. Cloud-based analytics processing can be a game-changer in making predictive analytics more accessible to a business, but this needs to be supported by a good business case and mature processes that will make proper use of the output of the models.

Gavin Halse

Gavin Halse is a chemical process engineer who has been involved in the manufacturing sector since the mid-1980s. He founded a software business in 1999 which grew to develop specialised applications for mining, energy and process manufacturing in several countries. Gavin is most interested in the effective use of IT in industrial environments and now consults part-time to manufacturing and software companies on the effective use of IT to achieve business results.

For more information contact Gavin Halse, Absolute Perspectives, +27 (0)83 274 7180, www.absoluteperspectives.com

© Technews Publishing (Pty) Ltd | All Rights Reserved